This blog post will not cover step-by-step instructions for performing a site recovery. Please review the TechNet documentation for that: https://technet.microsoft.com/en-us/library/gg712697.aspx#BKMK_RecoverSite

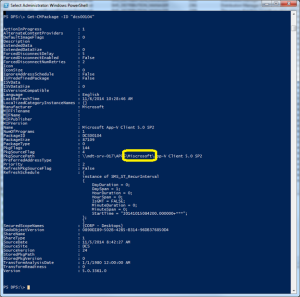

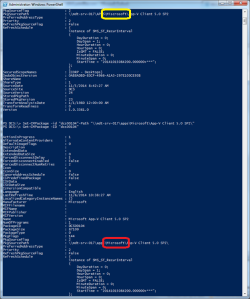

This post covers how to recover from a failed service pack upgrade. Upgrades can fail for many reasons; mine failed on the CAS because my remote desktop session was terminated in the middle of the SP1 for R2 install. My hierarchy is a CAS and two primary sites, so my recovery process is a little different from most: I can use the primary sites as reference sites, and there is no need to restore a database manually.

So which media do you use to recover the site? The new SP1 media or the old R2 media?

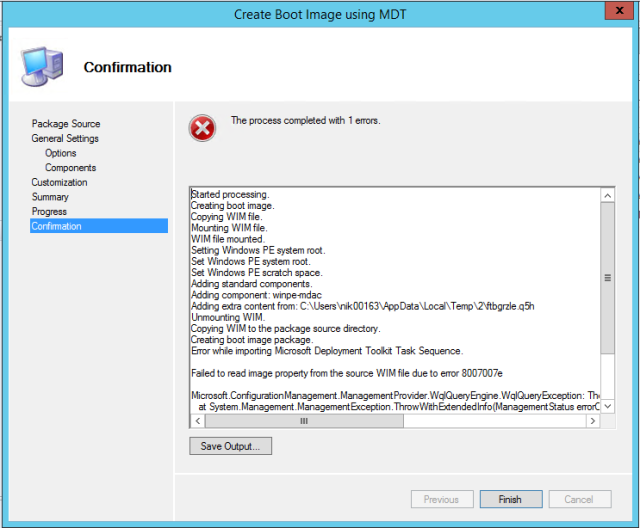

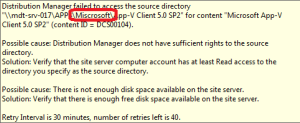

Using the R2 media gives an error stating that downgrade is not supported. Using the SP1 media will succeed, but as you’ll see later, you’ll run into issues.

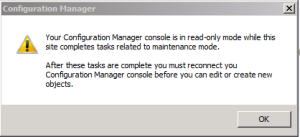

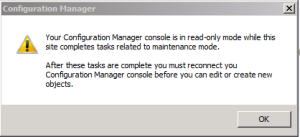

First, you’ll notice that when you open the console it is in read-only mode because the site server is performing recovery tasks.

I forgot to take a screenshot, but it looks the same as the maintenance mode error below.

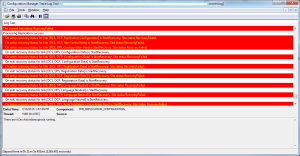

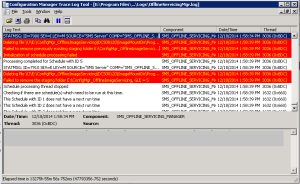

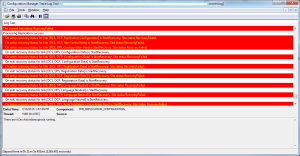

Read-only mode basically means something is not right with database replication. Check RCMCTRL.log; mine was filled with recovery failed errors like this one:

On entry: recovery status for link [xxx, yyy , Configuration Data] is StartRecovery. Site status RecoveryFailed.
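If the log is large, a short script can pull out just the recovery status entries. The following is a minimal Python sketch, assuming every entry of interest follows the format of the single line quoted above; the pattern, the function name `find_recovery_entries`, and the sample data are my own illustration, not anything shipped with ConfigMgr.

```python
import re

# Pattern modeled on the one RCMCTRL.log entry quoted above.
# Assumption: other recovery entries use the same wording.
STATUS_RE = re.compile(
    r"recovery status for link \[(?P<link>[^\]]*)\] is (?P<link_status>\w+)\."
    r"\s*Site status (?P<site_status>\w+)\."
)


def find_recovery_entries(lines):
    """Return (link, link_status, site_status) for every matching log line."""
    entries = []
    for line in lines:
        match = STATUS_RE.search(line)
        if match:
            # Normalize the spacing inside the bracketed link description.
            parts = [part.strip() for part in match.group("link").split(",")]
            entries.append(
                (", ".join(parts), match.group("link_status"), match.group("site_status"))
            )
    return entries


# Sample data: the line from this post plus an unrelated line.
sample = [
    "On entry: recovery status for link [xxx, yyy , Configuration Data] "
    "is StartRecovery. Site status RecoveryFailed.",
    "Some unrelated log line.",
]

for link, link_status, site_status in find_recovery_entries(sample):
    print(f"{link}: link={link_status}, site={site_status}")
```

To run it against a real log, pass an open file object instead of `sample`; the location of RCMCTRL.log depends on where the site server is installed.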

I figured replication just needed time to complete, so I let it run for a while, but nothing changed.

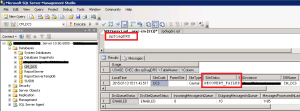

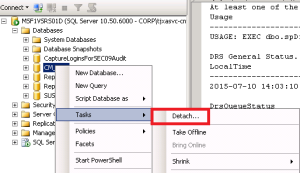

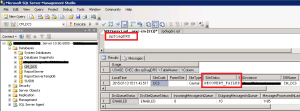

Next, open SQL Server Management Studio, select the site database, choose New Query, and execute the spDiagDRS stored procedure.

Site Status will show RECOVERY_FAILED.

I knew this was happening because SP1 makes a lot of changes to the database, and the most likely issue is that the database entries from the primary (not on SP1 yet; remember, upgrades need to be done top down) no longer match what the CAS is looking for.

Basically, I was stuck: I couldn’t install the older version of ConfigMgr and recover, because downgrades are not allowed, and the newer version didn’t recognize the older database. I couldn’t uninstall the CAS and start over either, because that requires uninstalling the working primary sites first.

Now what?

One option was to do a full system state restore of the CAS, cross my fingers that it worked, and then recover the site. I didn’t want to go this route, as it takes forever to get done at my organization and doesn’t always work as planned.

The only choice left was to hack away at the registry. (I called MS support before I did this to make sure they were OK with this approach, and it’s my dev environment, so I wasn’t too concerned if it failed.)

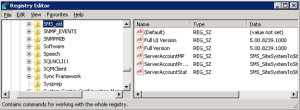

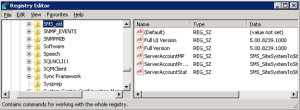

Find: HKLM\Software\Microsoft\SMS

Rename the key (or delete it if you are braver than me): SMS to SMS_Old

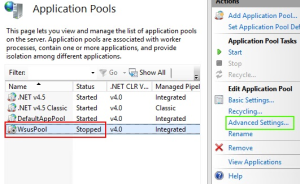

Uninstall the console if the newer version of the console was installed.

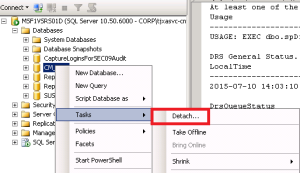

Detach the database from SQL Server.

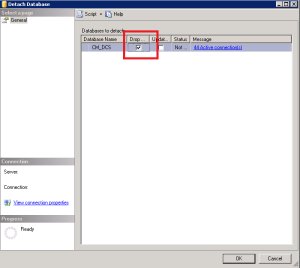

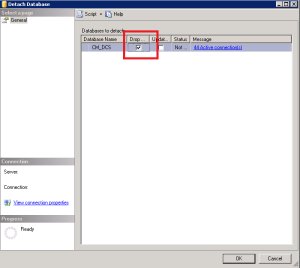

Select Drop Connections and click OK.

Move, rename, or delete the database .mdf and log .ldf files. I chose to move the files in case I needed to bring the existing DB back.
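I moved the files by hand, but the same step can be scripted. Here is a rough Python sketch, assuming the database has already been detached and that the data and log files share a common name prefix; the function name `move_database_files`, the prefix, and the folder paths are all hypothetical placeholders for your own environment.

```python
import shutil
from pathlib import Path


def move_database_files(data_dir, backup_dir, db_name):
    """Move <db_name>*.mdf and <db_name>*.ldf from data_dir into backup_dir.

    Assumption: the database is already detached, so SQL Server no longer
    holds the files open. Returns the list of new file paths.
    """
    data_dir, backup_dir = Path(data_dir), Path(backup_dir)
    backup_dir.mkdir(parents=True, exist_ok=True)

    moved = []
    for pattern in (f"{db_name}*.mdf", f"{db_name}*.ldf"):
        for src in sorted(data_dir.glob(pattern)):
            dest = backup_dir / src.name
            if dest.exists():
                # Refuse to overwrite an earlier backup copy.
                raise FileExistsError(f"{dest} already exists")
            shutil.move(str(src), str(dest))
            moved.append(dest)
    return moved
```

A call might look like `move_database_files(r"D:\SQLData", r"D:\SQLData_Old", "CM_XXX")`, where both folders and the database name are stand-ins. Moving rather than deleting keeps the old database available if you need to reattach it.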

Now, with the old installation information cleaned up, do a site recovery from the R2 installation media and follow all post-installation tasks. This time it will let you do a recovery without downgrade errors, and the database will replicate successfully.